Furniture Rearrangement Simulation in Real Environments Using 3D Semantic Segmentation

Semantic Segmentation of 3D Point Cloud to Virtually Manipulate Real Living Space

2018

石川礼,八馬遼,家永直人,久能若葉,杉浦裕太,斎藤英雄

Yuki Ishikawa, Ryo Hachiuma, Naoto Ienaga, Wakaba Kuno, Yuta Sugiura and Hideo Saito

[Reference / Citation]

Yuki Ishikawa, Ryo Hachiuma, Naoto Ienaga, Wakaba Kuno, Yuta Sugiura, Hideo Saito, Semantic Segmentation of 3D Point Cloud to Virtually Manipulate Real Living Space, 2019 12th Asia Pacific Workshop on Mixed and Augmented Reality (APMAR), Ikoma, Nara, Japan, 2019, pp. 1-7. [DOI]

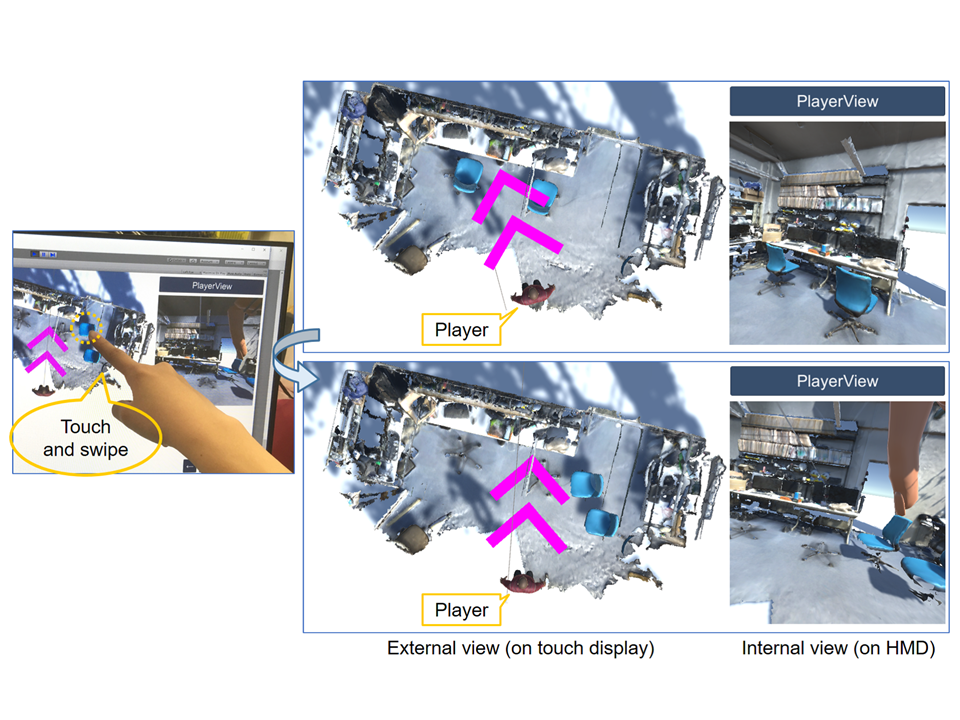

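This paper proposes a method for virtually rearranging the furniture in a real living space through semantic segmentation of an indoor 3D point cloud. The proposed method first performs semantic segmentation on a 3D point cloud of an indoor environment acquired with an RGB-D camera, using PointNet as the segmentation method. Focusing on local geometric information that PointNet does not take into account, the method then refines the class probabilities of the labels that PointNet's output assigns to each point. Next, the point cloud is clustered into objects to obtain the point cloud of each individual piece of furniture. Finally, the furniture is rearranged using Dollhouse VR, a virtual layout system. In experiments, we apply the proposed method to a 3D point cloud of an indoor environment captured with a smartphone and show that the class labels are refined and that virtual manipulation can be simulated with Dollhouse VR.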
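The abstract describes clustering the labeled point cloud into individual objects but does not name the clustering algorithm. Purely as an illustration, the Python sketch below assumes a simple Euclidean clustering (region growing) within each predicted class: points that share a label and are linked by neighbors closer than a distance threshold form one furniture instance. The function name `euclidean_cluster`, the `radius` parameter, and the example data are hypothetical and not taken from the paper.

```python
from collections import deque
from math import dist


def euclidean_cluster(points, labels, radius):
    """Assign an instance id to every point.

    Two points end up in the same instance when they share a class label and
    are linked by a chain of same-label neighbors no farther than `radius`
    apart (brute-force region growing, O(n^2); fine for a sketch only).
    """
    instance = [-1] * len(points)  # -1 marks "not yet assigned"
    next_id = 0
    for seed in range(len(points)):
        if instance[seed] != -1:
            continue
        # Grow a new instance outward from this unassigned seed point.
        instance[seed] = next_id
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            for j in range(len(points)):
                if (instance[j] == -1
                        and labels[j] == labels[i]
                        and dist(points[i], points[j]) <= radius):
                    instance[j] = next_id
                    queue.append(j)
        next_id += 1
    return instance


# Hypothetical example: two chairs far apart and one table near the first chair.
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (5.0, 0.0, 0.0), (5.1, 0.0, 0.0), (0.05, 0.0, 0.5)]
labels = ["chair", "chair", "chair", "chair", "table"]
print(euclidean_cluster(points, labels, radius=0.2))  # [0, 0, 1, 1, 2]
```

Clustering per label keeps a chair touching a table from merging into one object; a practical version would replace the brute-force neighbor loop with a spatial index such as a k-d tree.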

This paper presents a method for virtually manipulating real living space using semantic segmentation of a 3D point cloud captured in the real world. We applied PointNet to segment each piece of furniture from the point cloud of a real indoor environment captured by moving an RGB-D camera. To improve the segmentation, we focused on local geometric information that PointNet does not use, and we proposed a method to refine the class probabilities of the labels that PointNet's output assigns to each point. The effectiveness of our method was confirmed experimentally. We then created 3D models of the real-world furniture from the point cloud with corrected labels and virtually manipulated the real living space using Dollhouse VR, a layout system.
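The abstract does not spell out how local geometric information is used to refine PointNet's per-point class probabilities. As a stand-in only, the sketch below smooths each point's class distribution with the mean distribution of its k nearest neighbors and then takes the most probable class. This neighborhood-averaging scheme, the names `refine_probabilities` and `argmax_labels`, the parameters `k` and `alpha`, and the example data are all assumptions, not the authors' method.

```python
from math import dist


def refine_probabilities(points, probs, k, alpha):
    """Blend each point's class distribution with the mean of its k nearest neighbors.

    `alpha` in [0, 1] controls how much weight the neighborhood gets. A convex
    combination of probability distributions is itself a distribution, so no
    renormalization is needed.
    """
    refined = []
    for i, p in enumerate(points):
        # Brute-force k-nearest-neighbor search, excluding the point itself.
        others = [j for j in range(len(points)) if j != i]
        neighbors = sorted(others, key=lambda j: dist(p, points[j]))[:k]
        if not neighbors:
            refined.append(list(probs[i]))  # isolated point: keep it unchanged
            continue
        num_classes = len(probs[i])
        mean = [sum(probs[j][c] for j in neighbors) / len(neighbors)
                for c in range(num_classes)]
        refined.append([(1 - alpha) * probs[i][c] + alpha * mean[c]
                        for c in range(num_classes)])
    return refined


def argmax_labels(probs):
    """Return the index of the most probable class for each point."""
    return [max(range(len(row)), key=row.__getitem__) for row in probs]


# Hypothetical example: classes (0 = chair, 1 = table); point 2 looks mislabeled.
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0), (0.3, 0.0, 0.0)]
probs = [[0.9, 0.1], [0.8, 0.2], [0.4, 0.6], [0.9, 0.1]]
print(argmax_labels(probs))  # [0, 0, 1, 0]
print(argmax_labels(refine_probabilities(points, probs, k=2, alpha=0.5)))  # [0, 0, 0, 0]
```

Here the isolated "table" prediction is overridden by its confident "chair" neighbors. A larger `alpha` trusts the neighborhood more, which suppresses noise but can also blur genuine boundaries between adjacent pieces of furniture.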