Grasping Hand Pose Estimation from RGB Images Using Digital Human Model by Convolutional Neural Network

2017

稲生健太郎,家永直人,杉浦裕太,斎藤英雄,宮田なつき,多田充徳

Kentaro Ino, Naoto Ienaga, Yuta Sugiura, Hideo Saito, Natsuki Miyata, Mitsunori Tada

[Reference / Citation]

Kentaro Ino, Naoto Ienaga, Yuta Sugiura, Hideo Saito, Natsuki Miyata, Mitsunori Tada, Grasping Hand Pose Estimation from RGB Images Using Digital Human Model by Convolutional Neural Network, in Proc. of 3DBODY.TECH 2018 – 9th Int. Conf. and Exh. on 3D Body Scanning and Processing Technologies, 154-160, October 16-17, 2018, Lugano, Switzerland. [DOI]

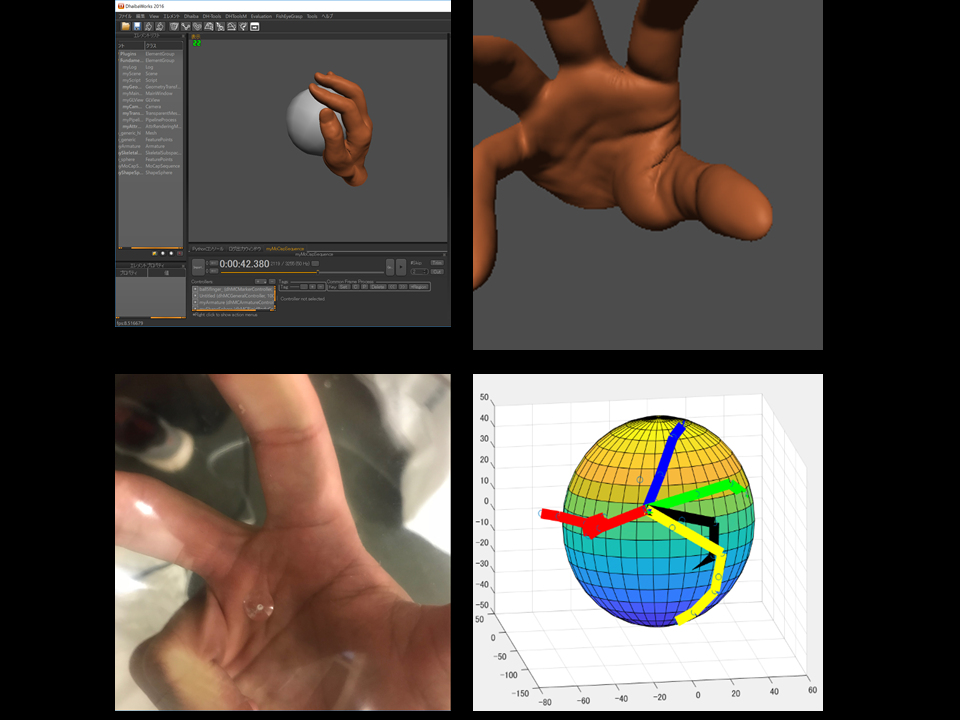

We propose a method for estimating the 3D position and pose of a hand from a regular RGB image. We created a dataset using DhaibaWorks, software that outputs the most suitable hand pose in a digital environment by referring to motion capture recordings. For motion capture, we placed 28 markers on the hand and 4 markers on the surface of the grasped object. The positions of the markers on the hand serve as the feature points to be estimated. Using a convolutional neural network (CNN), we then trained a model on pairs of RGB hand images and the 3D positions of their feature points, and used it to estimate those positions for unseen images.
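The abstract does not specify the network architecture, input resolution, or loss, so the following is only a minimal NumPy sketch of the regression setup it describes, not the authors' model. A CNN maps an RGB image to the 3D positions of the 28 hand markers, i.e. 28 × 3 = 84 output values. The two 3×3 convolution layers, the 32×32 input, global average pooling, the mean-squared-error objective, the mean per-marker distance metric, and all function names are illustrative assumptions. Training by backpropagation over the image/marker pairs is omitted; the weights below are random.

```python
import numpy as np

NUM_HAND_MARKERS = 28  # markers on the hand (from the abstract); these are the regression targets


def conv2d_valid(x, w, b):
    """Naive 'valid' 2D cross-correlation, as used in CNN layers.

    x: (H, W, C_in) input feature map
    w: (k, k, C_in, C_out) filter bank
    b: (C_out,) bias
    returns: (H - k + 1, W - k + 1, C_out)
    """
    k = w.shape[0]
    h_out = x.shape[0] - k + 1
    w_out = x.shape[1] - k + 1
    out = np.empty((h_out, w_out, w.shape[3]))
    for i in range(h_out):
        for j in range(w_out):
            patch = x[i:i + k, j:j + k, :]  # (k, k, C_in)
            # Contract the patch against every filter at once -> (C_out,)
            out[i, j, :] = np.tensordot(patch, w, axes=([0, 1, 2], [0, 1, 2])) + b
    return out


def relu(x):
    return np.maximum(x, 0.0)


def init_params(rng, channels=(3, 8, 16), kernel=3):
    """Random weights for a hypothetical two-layer conv regressor (assumed sizes)."""
    params = {"convs": []}
    for c_in, c_out in zip(channels[:-1], channels[1:]):
        std = np.sqrt(2.0 / (kernel * kernel * c_in))  # He initialization
        w = rng.normal(0.0, std, size=(kernel, kernel, c_in, c_out))
        params["convs"].append((w, np.zeros(c_out)))
    # Fully connected head: pooled features -> 84 coordinates
    params["fc_w"] = rng.normal(0.0, 0.01, size=(channels[-1], NUM_HAND_MARKERS * 3))
    params["fc_b"] = np.zeros(NUM_HAND_MARKERS * 3)
    return params


def predict_markers(params, image):
    """Map an RGB image (H, W, 3) with values in [0, 1] to (28, 3) marker positions."""
    x = image
    for w, b in params["convs"]:
        x = relu(conv2d_valid(x, w, b))
    features = x.mean(axis=(0, 1))  # global average pooling -> (C,)
    coords = features @ params["fc_w"] + params["fc_b"]  # (84,)
    return coords.reshape(NUM_HAND_MARKERS, 3)


def mse_loss(pred, target):
    """Assumed training objective: mean squared error over all coordinates."""
    return float(np.mean((pred - target) ** 2))


def mean_marker_error(pred, target):
    """Assumed evaluation metric: mean Euclidean distance per marker."""
    return float(np.mean(np.linalg.norm(pred - target, axis=1)))


# Usage: one forward pass on a random stand-in for an RGB hand image.
rng = np.random.default_rng(0)
params = init_params(rng)
image = rng.random((32, 32, 3))
pred = predict_markers(params, image)
print(pred.shape)  # (28, 3)
```

Global average pooling keeps the fully connected head independent of the input resolution, which keeps the sketch small; a practical model would use a deeper backbone and be trained on the DhaibaWorks-generated image/marker pairs.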